Machine learning takes starring role in exploring the universe

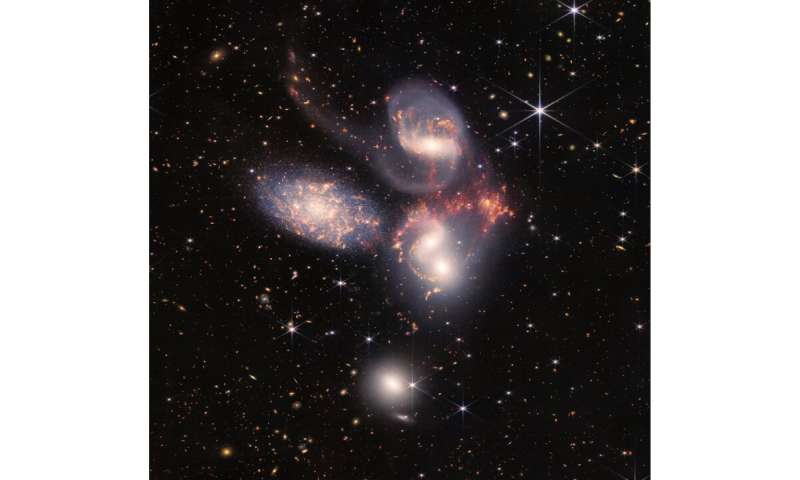

The intricate, beautiful images of the universe streaming from the James Webb Space Telescope (JWST) are more than just pretty pixels that find their way onto computer or smartphone screens. These images represent data, and lots of it: JWST delivers approximately 235 gigabytes of science data every day, about the same amount of data as a 10-day high-definition movie binge-watching session.

JWST and other telescopes and sensors have provided today's astronomers with an ever-growing stream of data. These sources let astronomers look deeper into space and farther back in time than ever before, making new discoveries possible, including insights into how stars die. Recent Penn State work using data from JWST may change the way scientists understand the origin of galaxies.

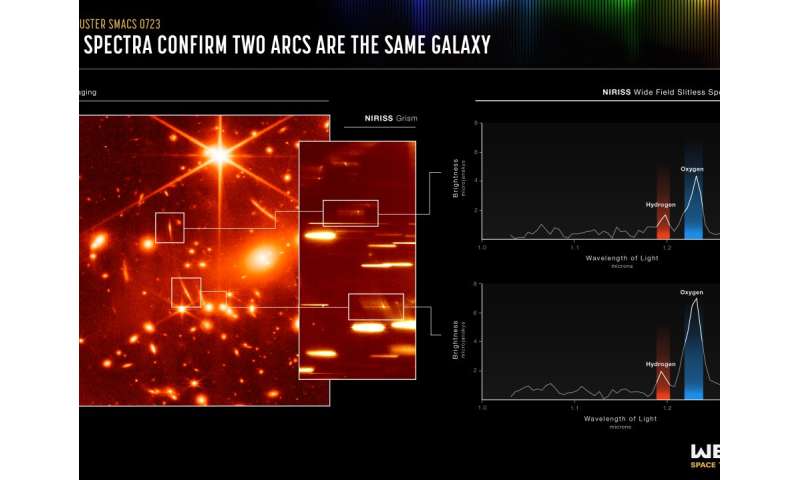

Managing all this data isn't without its problems, however. Astronomers must rely on supercomputers and advanced algorithms, including machine learning, to turn this flood of data into accurate models of the vastness of space, to unveil discoveries and inspire new questions, and to create stunning pictures of the universe.

Joel Leja and V. Ashley Villar, both assistant professors of astronomy and astrophysics and co-hires of Penn State's Institute for Computational and Data Sciences (ICDS), are among the scientists establishing Penn State as a leader in using machine learning techniques to handle massive streams of data.

According to Leja, machine learning approaches allow researchers to crunch numbers more efficiently and accurately than previous methods. In some cases, such as interpreting galaxy imaging, these machine learning techniques can be nearly a million times faster than traditional analyses, he added.

Before the advent of machine learning, crunching data involved using analytical equations and compiling large amounts of data into tables. Researchers—often graduate students—would spend a considerable amount of time gathering and analyzing data. Without machine learning, calculations were often repetitive and time-consuming, and there was no efficient way to speed up the process.

Leja said it was a lot like planning a massively complicated trip.

"Let's say you're trying to find the best way from Los Angeles to San Francisco," said Leja. "Using the old techniques, we would make a list of roads, try every single route, calculate the whole distance on every tiny road—the small roads, the major highways, roundabout ways—and we would need to map every route, doing it one by one. It's not a very good way to do it. It typically gets you the right answer, but machine learning tries to do this in a much smarter way using data—for example, it might instead use millions of previous travel routes and just quickly ask which one is fastest."
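Leja's analogy can be made concrete with a toy sketch, assuming an invented road network: the "old" approach enumerates every possible route and totals its travel time, while the "data-driven" approach simply consults a log of previously recorded trips. The waypoints, travel times and trip log below are hypothetical illustrations, and a real machine learning model would learn to generalize from such data rather than merely look it up.

```python
# Toy illustration of Leja's route analogy. The road network, travel
# times (hours) and trip log are hypothetical, invented for this sketch.
EDGES = [
    ("LA", "Bakersfield", 2.0),
    ("LA", "SantaBarbara", 1.5),
    ("Bakersfield", "Fresno", 1.5),
    ("Bakersfield", "SanLuisObispo", 2.5),
    ("SantaBarbara", "SanLuisObispo", 2.0),
    ("SanLuisObispo", "Fresno", 2.5),
    ("SanLuisObispo", "SF", 3.5),
    ("Fresno", "SF", 3.0),
]


def build_graph(edges):
    """Turn an edge list into an undirected adjacency map."""
    graph = {}
    for a, b, hours in edges:
        graph.setdefault(a, {})[b] = hours
        graph.setdefault(b, {})[a] = hours
    return graph


def all_routes(graph, start, goal, path=()):
    """'Old technique': yield every simple path from start to goal."""
    path = path + (start,)
    if start == goal:
        yield list(path)
        return
    for nxt in graph[start]:
        if nxt not in path:
            yield from all_routes(graph, nxt, goal, path)


def route_hours(graph, route):
    """Sum the travel time along consecutive legs of a route."""
    return sum(graph[a][b] for a, b in zip(route, route[1:]))


def brute_force_best(graph, start, goal):
    """Map and time every route one by one, then keep the fastest."""
    return min(all_routes(graph, start, goal),
               key=lambda r: route_hours(graph, r))


def best_from_history(trips, start, goal):
    """'Data-driven' shortcut: pick the fastest previously observed trip."""
    matching = [(route, hours) for route, hours in trips
                if route[0] == start and route[-1] == goal]
    return min(matching, key=lambda t: t[1])[0]


roads = build_graph(EDGES)
print(len(list(all_routes(roads, "LA", "SF"))), "routes checked")
print("exhaustive:", brute_force_best(roads, "LA", "SF"))
past_trips = [  # hypothetical log of earlier journeys and observed hours
    (["LA", "SantaBarbara", "SanLuisObispo", "SF"], 7.2),
    (["LA", "Bakersfield", "Fresno", "SF"], 6.7),
]
print("from history:", best_from_history(past_trips, "LA", "SF"))
```

The exhaustive search has to visit every route, and the number of routes can grow combinatorially as the road network gets larger; the history-based shortcut only scans the stored trips, which is the kind of saving Leja describes.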

Machine learning doesn't just cut back on human labor; according to Villar, it can also cut down on computational labor, which in turn saves energy.

"The human labor issue is important, but we also have to consider the computer labor problem," said Villar. "It's using so many hours of computational time, which also means it is using a lot of energy."

Field changer

Those computational savings can be hard to comprehend, but they are creating a new paradigm in astronomical discovery, according to the astronomers.

"Machine learning is completely changing my field," said Leja. "It just processes enormous amounts of data and runs complex models really quickly, which is well suited to astronomical data that is flooding our systems right now."

The old process was also computationally unforgiving, said Leja, recalling his experience as a postdoctoral researcher at Harvard.

"It took special access—and I had to spend a lot of time applying for and then running these simulations," said Leja. "And I could only run it once, which can be very scary for science. Ideally you want to run calculations many times to test out things, to try out new questions and make sure you get it right."

Now, astronomers can use machine learning techniques to accomplish in a few weeks on a laptop what, just a few years ago, took an enormous amount of time and huge computational resources. One such technique is the neural network emulator: a neural network, a method inspired by the human brain for teaching computers to process data, is trained to mimic the output of a slow, expensive model and can then stand in for that model at a fraction of the cost.
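The emulator idea can be sketched in a few lines, under stated assumptions: `slow_physical_model` below is a hypothetical stand-in for an expensive simulation, and to keep the example short and deterministic only the network's output layer is trained (by linear least squares) while its hidden layer keeps fixed random weights. That is a simplification of the full backpropagation training a research emulator would normally use, and this is not the Penn State team's code.

```python
import numpy as np


def slow_physical_model(x):
    """Hypothetical stand-in for an expensive simulation. Real models
    (for example, galaxy spectral-synthesis codes) are far slower."""
    return np.sin(3.0 * x) + 0.5 * x


class NeuralNetEmulator:
    """One-hidden-layer tanh network trained to mimic a slow model."""

    def __init__(self, n_hidden=50, seed=0):
        rng = np.random.default_rng(seed)
        # Hidden layer: fixed random weights and biases (simplification:
        # these are not trained in this sketch).
        self.w = rng.normal(scale=3.0, size=n_hidden)
        self.b = rng.uniform(-3.0, 3.0, size=n_hidden)
        self.out = None

    def _hidden(self, x):
        # Shape (n_samples, n_hidden): tanh activation for each input.
        return np.tanh(np.outer(x, self.w) + self.b)

    def fit(self, x, y):
        # Train the output-layer weights by linear least squares.
        self.out, *_ = np.linalg.lstsq(self._hidden(x), y, rcond=None)
        return self

    def predict(self, x):
        # Cheap forward pass that replaces a call to the slow model.
        return self._hidden(x) @ self.out


# Run the slow model once on a training grid, then query the emulator.
x_train = np.linspace(-1.0, 1.0, 200)
emulator = NeuralNetEmulator().fit(x_train, slow_physical_model(x_train))

x_new = np.random.default_rng(1).uniform(-1.0, 1.0, 500)
max_err = np.max(np.abs(emulator.predict(x_new) - slow_physical_model(x_new)))
print(f"max emulator error on unseen inputs: {max_err:.2e}")
```

The expensive model is evaluated only to build the training set; every later prediction is a small matrix product, which is where an emulator's speed-up comes from.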

As computers become faster and more powerful and machine learning approaches improve, the researchers expect that astronomers may one day regard a week on a laptop as somewhat slow.

"There has been a speed up by a factor of about a million in my field," said Leja. "It blows my mind every time I think about it, and it lets us ask new science questions."

How ICDS helps with 'computational muscle'

ICDS supports the astronomers by putting computational muscle behind the processing of vast amounts of data collected by ever-more-powerful sensors, and the institute is preparing to help scientists as even larger data sources come online.

The Legacy Survey of Space and Time (LSST), a next-generation survey, will produce about 15 terabytes of data each night over 10 years, according to Leja. For scale, a disk with a terabyte of storage can hold around 200,000 songs. The LSST may not be downloading 3 million songs a night, but the data it eventually provides will be music to astrophysicists' ears.
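The "3 million songs" figure follows directly from the two numbers in the paragraph. The sketch below reproduces that arithmetic and, under the labeled assumption that data arrive every night of the 10-year survey, gives a rough upper bound on the total volume. Only the 15-terabyte and 200,000-song figures come from the article.

```python
# Back-of-envelope check of the LSST figures quoted in the article.
TB_PER_NIGHT = 15          # LSST nightly output, per Leja
SONGS_PER_TB = 200_000     # the article's rough songs-per-terabyte figure
SURVEY_YEARS = 10

songs_per_night = TB_PER_NIGHT * SONGS_PER_TB

# Upper bound only: assumes data are collected every single night,
# ignoring weather and maintenance downtime, so the real total is lower.
max_total_tb = TB_PER_NIGHT * 365 * SURVEY_YEARS

print(f"{songs_per_night:,} songs per night")
# Decimal petabytes (1 PB = 1,000 TB) assumed for the conversion.
print(f"at most ~{max_total_tb / 1000:.0f} petabytes over {SURVEY_YEARS} years")
```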

"If we tried to use standard techniques to interpret these images of galaxies, using the full dataset, it would take something like 380 years on the (ICDS) Roar cluster, or 100 billion CPU hours," said Leja. "But using the machine learning techniques that we've developed—this has been supported by ICDS directly—we can do it, if we got all of Roar, in about three and a half hours."
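The figures in this quote are consistent with the "factor of about a million" Leja cites earlier. A quick check, taking the quoted numbers at face value and assuming a 365.25-day year, is sketched below; the implied core count is an inference from those numbers, not a published specification of Roar.

```python
HOURS_PER_YEAR = 365.25 * 24               # Julian year, an assumed convention
traditional_hours = 380 * HOURS_PER_YEAR   # "about 380 years" on Roar
ml_hours = 3.5                             # "about three and a half hours"
cpu_hours = 100e9                          # "100 billion CPU hours"

speedup = traditional_hours / ml_hours

# Inferred, not stated in the article: how many cores running in
# parallel would turn 100 billion CPU hours into 380 years of wall time.
implied_cores = cpu_hours / traditional_hours

print(f"speed-up: ~{speedup:,.0f}x")
print(f"implied parallel cores: ~{implied_cores:,.0f}")
```

The ratio comes out a little under one million, matching Leja's description of a speed-up "by a factor of about a million."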

Villar said she is eager to use this power to shed light on star explosions, one of her areas of research.

"There's a lot of this LSST data that will come online that will include something like 5 billion galaxies," said Villar. "One thing that I'm interested in doing is using that data to study stars when they explode. So one thing that'd be really helpful is if we could very, very quickly get an idea of the history of that galaxy to understand, in a sense, the history of the star that exploded. And to do that, with traditional methods, it's just computationally infeasible. But with these new methods, it should take literally seconds to do each one."

Both Leja and Villar agree that ICDS resources—such as access to the Roar supercomputer and staff expertise—are important to conducting this type of research.

"The ICDS resources are completely essential to answering these questions," said Leja. "Part of the reason I think Penn State is an excellent place for this research is the awesome computational resources and the team at Roar. We use the cluster to do all of the training of our models. It's where we battle-test our models to get them ready for the real world. It's also where we generate all of our mock data that we learn about, or we train our algorithms on. Roar is an essential part of the workflow for my team."

Provided by Pennsylvania State University